Research

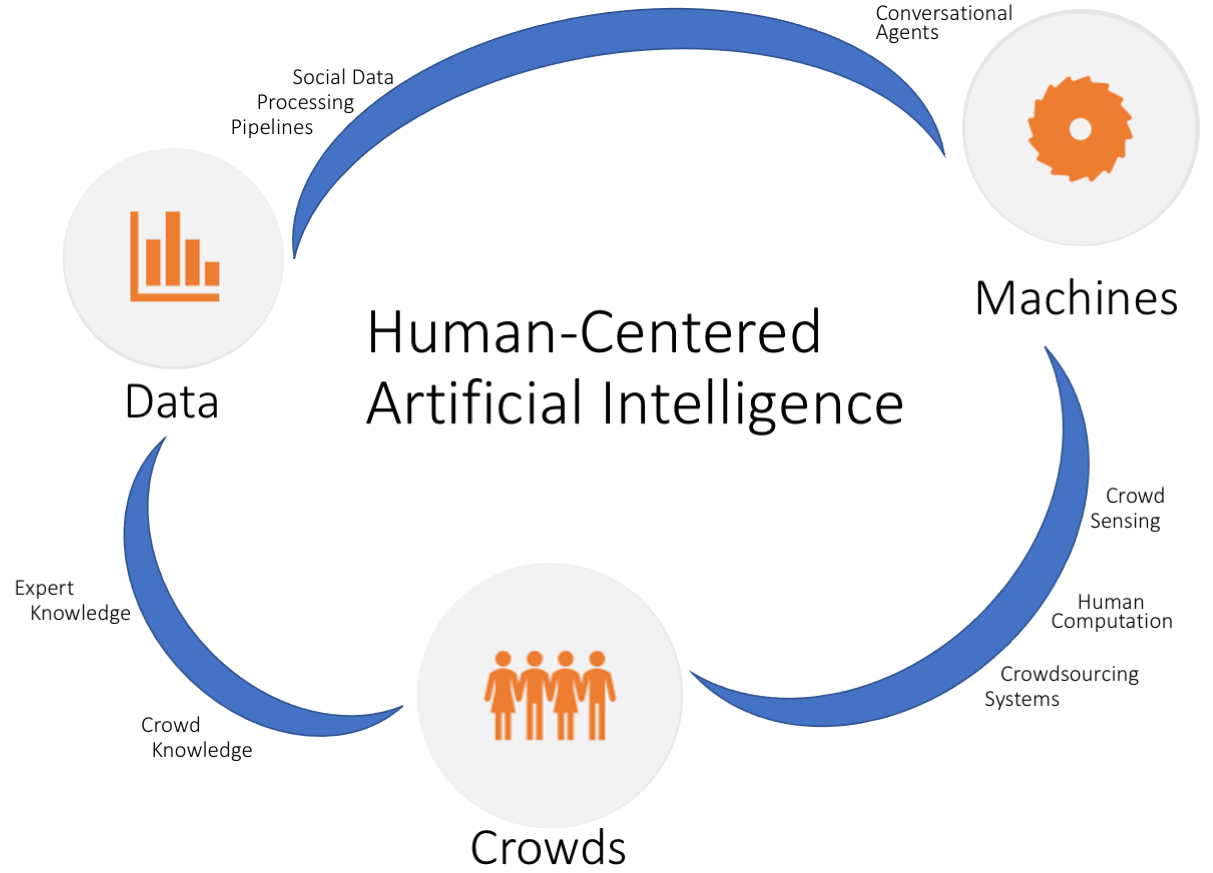

My research focuses on the development and application of multimodal machine learning and deep learning, computer vision, signal processing, and statistical methods to large and diverse human-centered datasets.

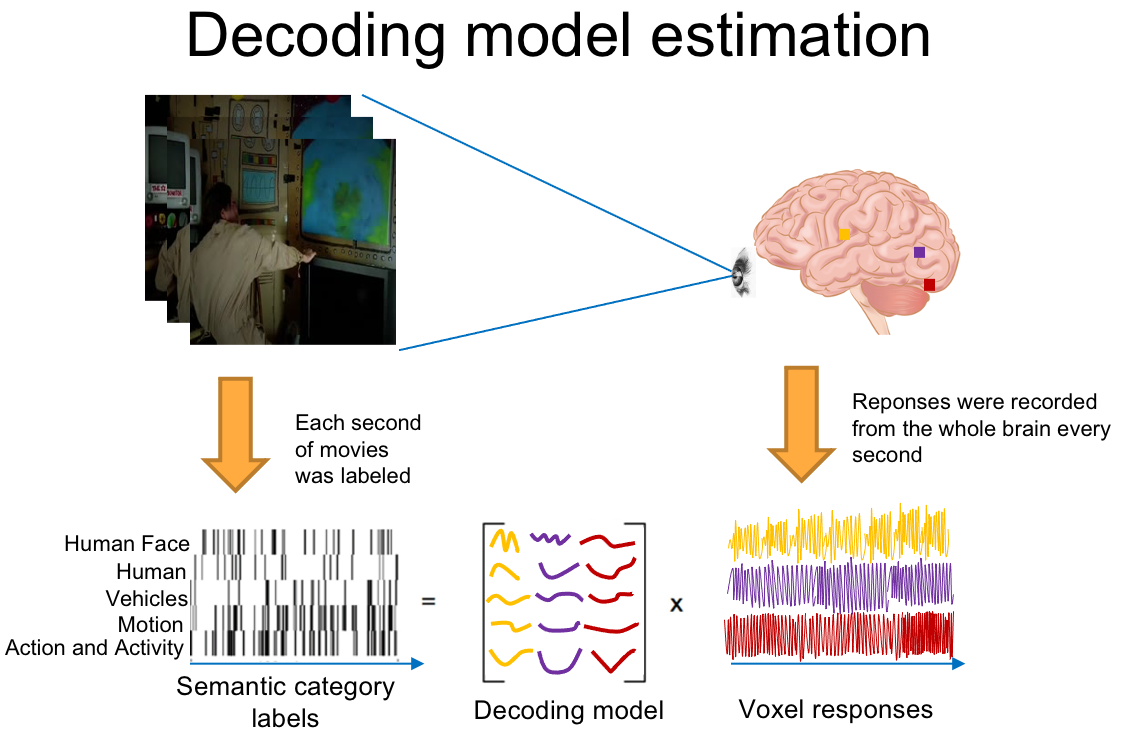

Exploring Emotions and their Dynamics in the Human Brain: Brain Imaging

We investigate the neural structures in the human brain that are responsible for the multiple component processes that are dynamically integrated to generate emotion episodes. These include appraisal mechanisms that process contextual information about events, as well as motivational, expressive, and physiological mechanisms that orchestrate behavioral manifestations. In this project, we aim to:

- explore brain networks that mediate different components and appraisals using fMRI, physiological, and behavioral measures during emotional events;

- develop naturalistic elicitation procedures by exploiting aesthetic and emotional movies;

- apply new signal processing and machine learning methods to uncover the neural representation of emotions.

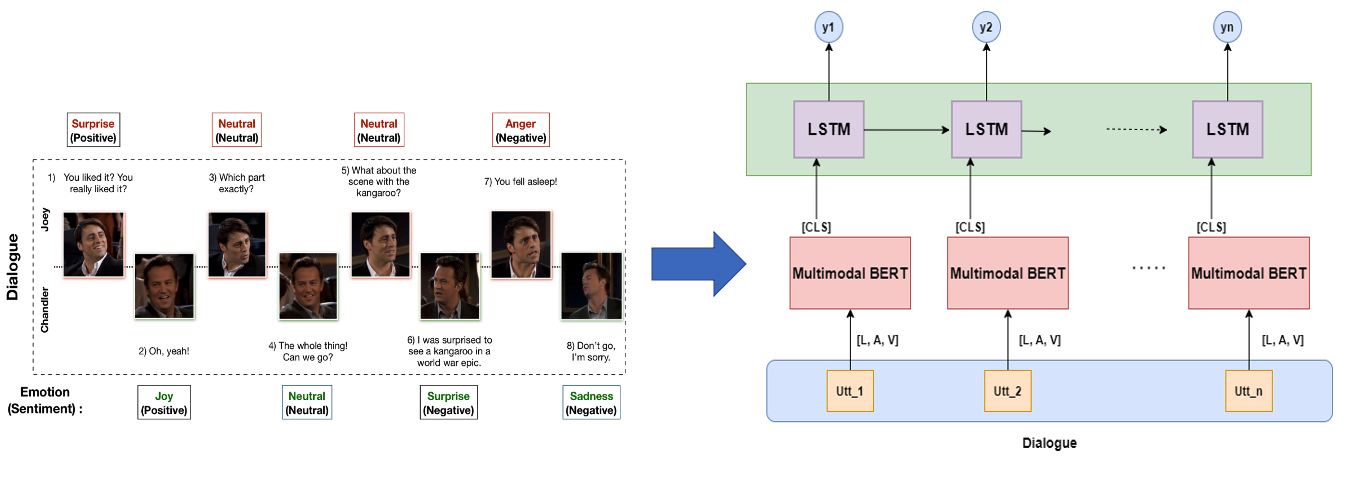

Context-Aware AI: Contextualization Modelling in Multi-party Conversations

Conversation, the oral exchange of sentiments, observations, opinions, and ideas, is one of the most natural forms of human interaction. Emotion recognition in multi-party settings raises many challenges, such as tracking multiple speakers, dealing with environmental noise and occlusions, and handling missing modalities. Moreover, the emotions of individuals and groups may change as a conversation unfolds. We aim to propose a framework for multimodal emotion recognition in multi-party settings that:

- models multimodal multi-party conversations;

- models contextualization at utterance and dialogue level.

Healthcare Informatics

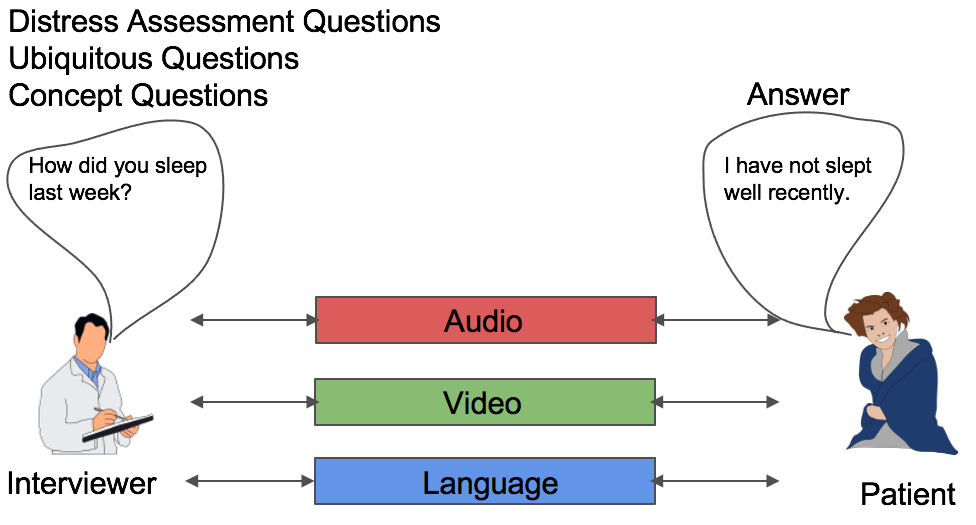

Multimodal Depression Severity Assessment

Depression is a major global health concern and has been recognized as a complex cause of disability that reduces quality of life and productivity in societies worldwide. Moreover, depression can lead to a high risk of suicidal behavior. Effective, evidence-based treatments for depression exist, but many individuals suffering from depression go undetected and therefore untreated. Increasing the accuracy, efficiency, and adoption of depression assessment thus has the potential to reduce human suffering and even save lives. Recent advances in computer sensing technologies provide new opportunities to improve depression assessment, especially in terms of objectivity, scalability, and accessibility. We aim to develop sensing technologies that automatically measure subtle changes in individuals’ behavior related to affective, cognitive, and psychosocial functioning. Our goal is to develop and refine computational tools that automatically measure depression-related behavioral biomarkers, and to evaluate the clinical utility of these measurements.

Mobile Data Assessment for the Prediction of Suicide

We use smartphone technology to collect intensive longitudinal data from adolescents at high risk of mental disorders in order to predict short-term risk for suicidal thoughts and behaviors. This project focuses on automated techniques for processing multimodal data, especially the automated analysis of mobile data using natural language processing approaches.

Social Computing: Conversational Agents

Turn-changing, in which the roles of speaker and listener switch, is an important aspect of smooth conversation. For smooth turn-changing, conversational partners should carefully monitor the other partners' willingness to speak and listen (a.k.a. turn-management) and decide whether to speak or yield based on their own willingness and that of the other partners. Predicting turn-changing can help conversational agents and robots, which need to know when to speak and take turns at the appropriate time. The field of human-computer interaction has long been dedicated to the computational modeling of turn-changing. In this project, we study turn-management willingness during dyadic interactions, with the goal of incorporating willingness modeling into computational models of turn-changing prediction.

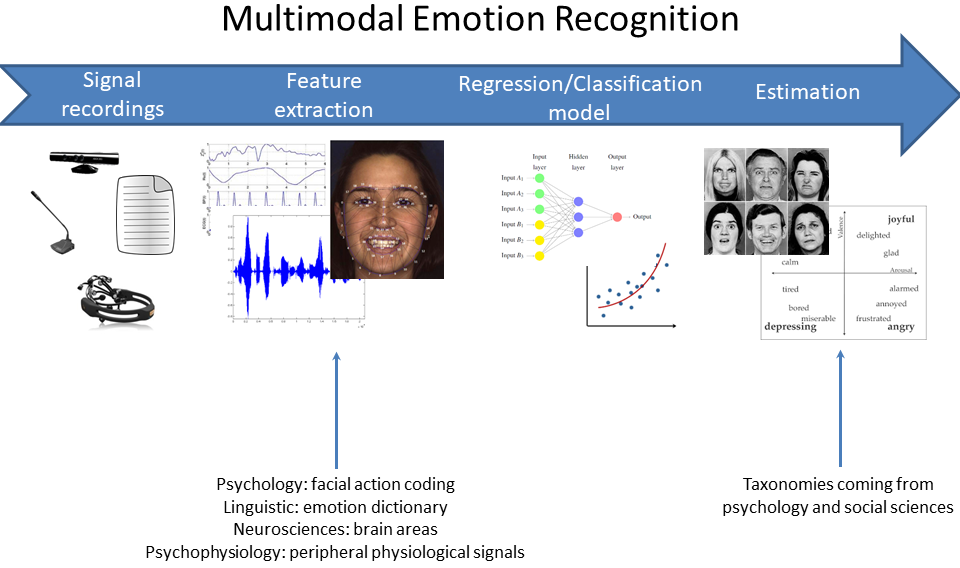

Affective Computing

Affective computing is an interdisciplinary research field at the intersection of computer science, psychology, neuroscience, and cognitive science that aims to develop and build “emotional machines” (i.e., smart systems and devices) that are able to sense, recognize, and interpret human affect to facilitate human-machine/computer interaction.

Multimodal Emotion Recognition

We work on aesthetic and affective movie content analysis. Our analyses reveal a difference between the emotions that movie audiences perceive and the emotions induced in them. This suggests that one cannot simply assume that the emotions perceived in the stimuli are consistent with the emotions induced in spectators, and researchers should take this into account when designing experiments for affective content analysis of movies. Furthermore, both perceived and induced emotions of movie audiences are associated with the occurrence of aesthetic highlights in movies. We also investigate multimodal modelling approaches to predict movie-induced emotions from movie content-based features, as well as from the physiological and behavioral reactions of movie audiences. Our experiments reveal that induced emotion recognition benefits from including temporal information and performing multimodal fusion.
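As a rough illustration of how temporal information and multimodal fusion can be combined, the sketch below performs weighted late fusion of per-modality emotion probabilities (for example, from hypothetical movie-content and physiological classifiers) and then smooths the fused probabilities over time with a moving average. The function names `late_fusion` and `temporal_smooth`, the equal default weights, and the moving-average smoother are illustrative assumptions, not the specific models used in our experiments.

```python
import numpy as np


def late_fusion(modality_probs, weights=None):
    """Weighted average of per-modality class probabilities (hypothetical helper).

    modality_probs: list of arrays, each of shape (T, C): T time steps, C emotion classes.
    weights: optional non-negative per-modality weights; defaults to equal weights.
    """
    stacked = np.stack(modality_probs)  # shape (M, T, C)
    if weights is None:
        weights = np.ones(len(modality_probs))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # normalize so fused rows still sum to 1
    return np.tensordot(weights, stacked, axes=1)  # contract over modalities -> (T, C)


def temporal_smooth(probs, window=3):
    """Moving average over time (hypothetical helper); window must be odd to keep T steps."""
    if window % 2 == 0:
        raise ValueError("window must be odd")
    pad = window // 2
    # Repeat the first/last frame at the edges instead of padding with zeros.
    padded = np.pad(probs, ((pad, pad), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    return np.stack(
        [np.convolve(padded[:, c], kernel, mode="valid") for c in range(probs.shape[1])],
        axis=1,
    )


if __name__ == "__main__":
    content = np.array([[0.9, 0.1], [0.8, 0.2], [0.2, 0.8]])  # toy content-based probabilities
    physio = np.array([[0.7, 0.3], [0.4, 0.6], [0.1, 0.9]])   # toy physiological probabilities
    fused = late_fusion([content, physio])
    smoothed = temporal_smooth(fused, window=3)
    print(smoothed.argmax(axis=1))  # [0 0 1]
```

Late fusion keeps each modality's model independent, which makes it easy to drop a modality that is missing; early (feature-level) fusion is an equally valid alternative.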

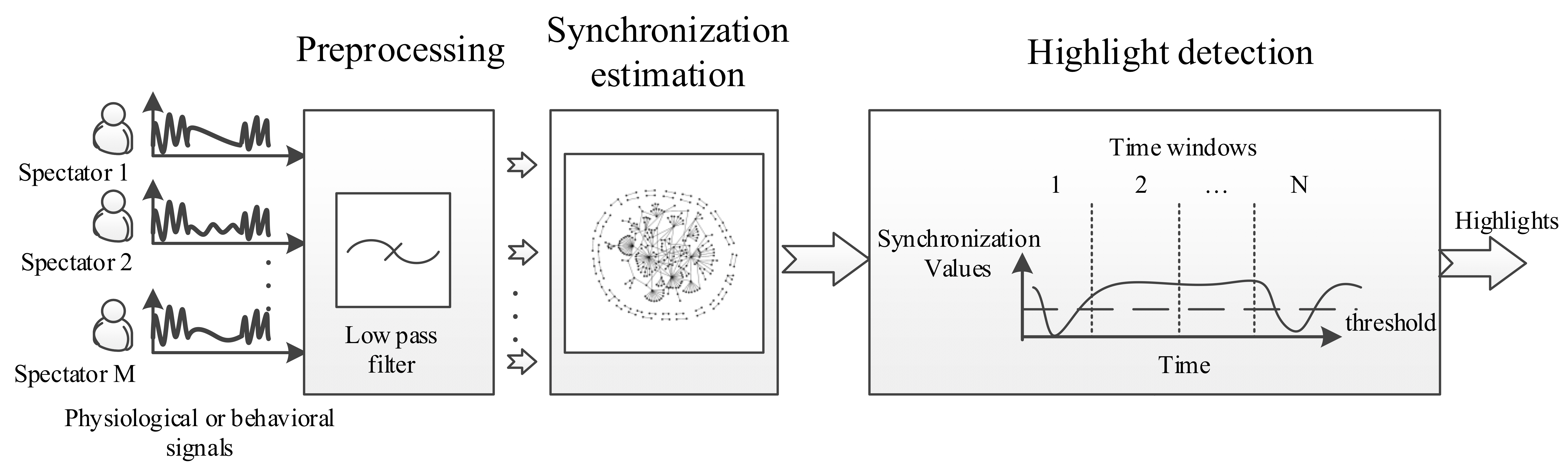

Highlight Detection in Movies

In this project, we analyze spectators’ physiological and behavioral reactions recorded in a movie theatre. The aesthetic highlights are defined, in collaboration with a movie critic, in terms of form (spectacular, subtle) and content (character development, dialogue, theme development). In particular, we analyze social interactions while watching movies by using synchronization measures, to provide insight into how spectators react to aesthetic movie content in a movie theatre. Furthermore, we investigate aesthetic highlight detection in movies based on different levels of physiological and behavioral synchrony among spectators.
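One common way to quantify physiological or behavioral synchrony among spectators is the mean pairwise Pearson correlation of their signals within sliding windows. The sketch below computes this score and flags windows with unusually high synchrony (above the mean plus `k` standard deviations) as candidate highlights. The window length, step, threshold rule, and the function names `windowed_synchrony` and `candidate_highlights` are illustrative assumptions, not necessarily the synchronization measures or detection method used in this project.

```python
import numpy as np


def windowed_synchrony(signals, window, step):
    """Mean pairwise Pearson correlation across spectators per sliding window (hypothetical helper).

    signals: array of shape (n_spectators, T), e.g. one physiological channel per spectator.
    Returns one synchrony score per window.
    """
    n, T = signals.shape
    if n < 2:
        raise ValueError("need at least two spectators")
    upper = np.triu_indices(n, k=1)  # each spectator pair counted once
    scores = []
    for start in range(0, T - window + 1, step):
        segment = signals[:, start:start + window]
        r = np.corrcoef(segment)  # (n, n) correlation matrix; rows are variables
        scores.append(np.nanmean(r[upper]))  # skip pairs with constant (NaN) segments
    return np.array(scores)


def candidate_highlights(scores, k=1.0):
    """Indices of windows whose synchrony exceeds mean + k * std (assumed threshold rule)."""
    threshold = scores.mean() + k * scores.std()
    return np.flatnonzero(scores > threshold)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    signals = rng.standard_normal((3, 100))  # three simulated spectators, unrelated noise
    shared = np.sin(np.linspace(0, 4 * np.pi, 20))
    # Simulate a synchronized response in samples 60-79 (window index 3).
    signals[:, 60:80] = shared + 0.05 * rng.standard_normal((3, 20))
    scores = windowed_synchrony(signals, window=20, step=20)
    print(candidate_highlights(scores))
```

In practice, "different levels" of synchrony could be explored by varying the window length or by computing the score separately per modality (e.g. heart rate versus movement) before thresholding.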